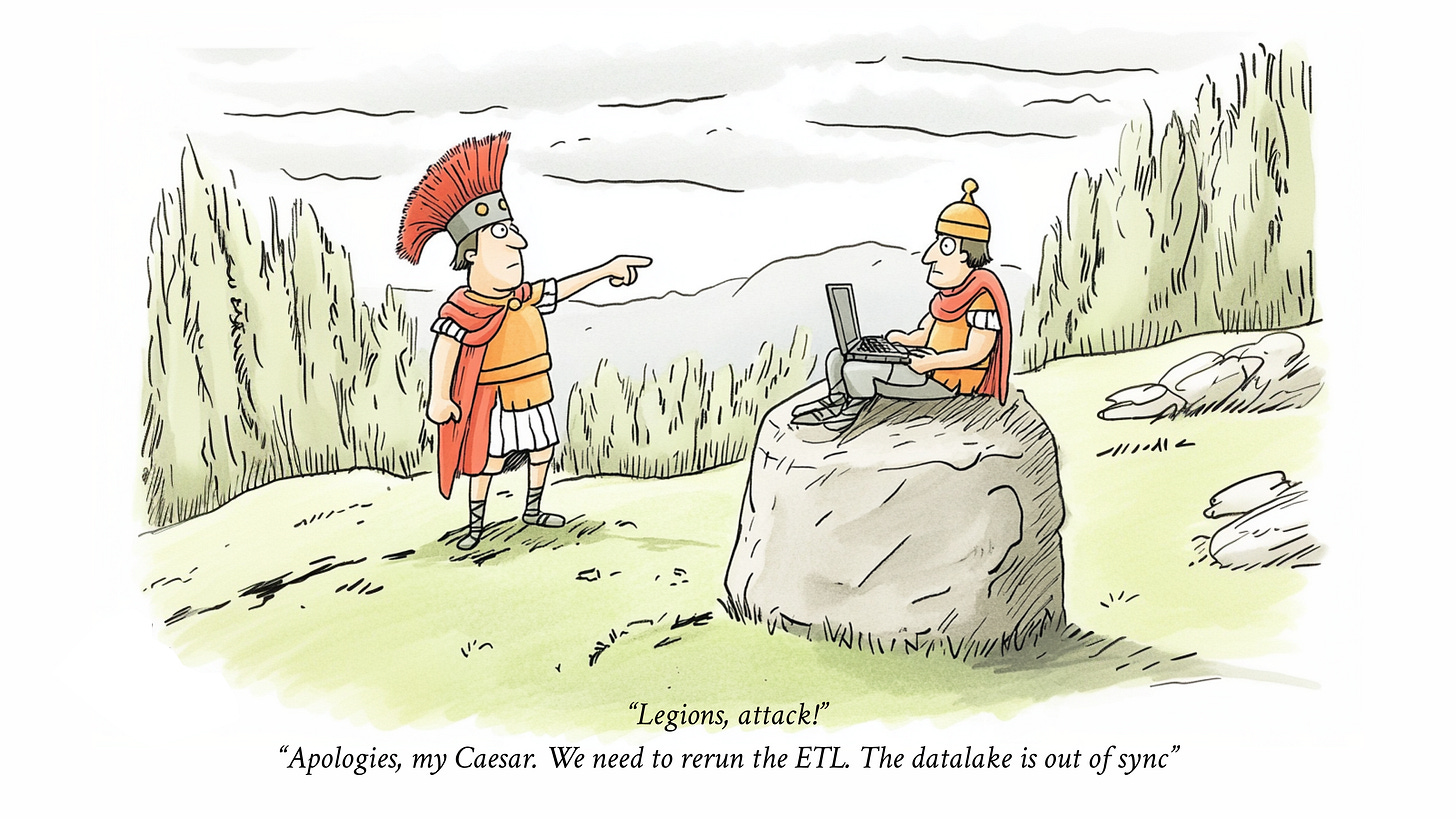

Your data stack is too complicated

also titled "Who's the dbt to your ETL and the E/L to your Looker if your Databricks isn't properly set up with your Marketo"

The spirit of industry sometimes gets the best of us, and this time around, we've made things unnecessarily complicated. I think this is an opportunity.

We’ve arrived at a point where the data landscape is a maze of tools, each serving a very specific purpose but often leading to a tangled web of integrations. The result? An overwhelming number of back-office processes that need to be managed, maintained, and understood just to keep things running.

Simplifying things by building a magical UX

Instead of building a complex ecosystem of tools that need constant upkeep, what if we frontloaded more of these processes directly into our applications? What if, rather than relying on a mess of back-office data tools, we designed our systems to handle data transformation and integration closer to the user-facing side of things?

Efficiency: When data processes are embedded in the core application flow, it reduces the need for constant back-end juggling. You can streamline operations and focus more on delivering value to users without getting bogged down by back-office complexities.

User Experience: By frontloading data processes, you ensure that data is processed and presented in real time, which improves the user experience: more accurate, timely, and relevant information, right where it’s needed.

Less Maintenance: Reducing reliance on a sprawling network of tools means less maintenance. When fewer moving parts are involved, it’s easier to maintain consistency, troubleshoot issues, and scale your systems.

If we rethink our approach and bring data processes closer to the application layer, we can cut through the clutter and complexity of the data tool market. Instead of managing an increasingly complicated web of integrations, we can focus on building systems that are inherently more robust and user-friendly.

By simplifying the architecture and emphasizing frontloaded processes, we can create a more direct path from data to decision-making, without the detour through a dozen different platforms.

Stochastic Computing to the Rescue: Structuring Data at the Point of Entry

In traditional data workflows, data cleanup and structuring often happen as a back-office process: an expensive, time-consuming endeavor that demands constant attention. But what if we could flip the script? What if messy, unstructured data could be cleaned, transformed, and structured the moment it enters your system, right at the edge?

This is where bem steps in. It sits right in the hot path, creating a new product category that shifts the paradigm. Our approach embeds data transformation directly within your production application: instead of sending unstructured data into a complex, backend-heavy pipeline, bem lets your application clean and structure data as it arrives.
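To make the idea of structuring data at the point of entry more concrete, here is a minimal Python sketch. It is not bem's API: the names `Invoice`, `FIELD_PATTERNS`, `structure`, `validate`, and `handle_incoming` are hypothetical, and the `structure` stub uses regular expressions as a deterministic stand-in for the stochastic, model-based extraction this section describes. What matters is the placement: raw text becomes a validated record inside the request handler, before anything is persisted, so no downstream cleanup job has to deal with it.

```python
import re
from dataclasses import asdict, dataclass
from decimal import Decimal


@dataclass
class Invoice:
    """Hypothetical target schema, used only for illustration."""
    vendor: str
    invoice_number: str
    total_cents: int


# Hypothetical field patterns for the regex stand-in below.
FIELD_PATTERNS = {
    "vendor": r"From:\s*(.+)",
    "invoice_number": r"Invoice\s*#\s*([\w-]+)",
    "total": r"Total:\s*\$?([\d,]+\.\d{2})",
}


def structure(raw: str) -> dict:
    """Turn unstructured text into candidate fields.

    Assumption: in a real system a model call would go here.
    This stub uses regular expressions so the example runs offline.
    """
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = re.search(pattern, raw)
        if match:
            fields[name] = match.group(1).strip()
    return fields


def validate(fields: dict) -> Invoice:
    """Enforce the schema before the record goes anywhere else."""
    missing = set(FIELD_PATTERNS) - set(fields)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    # Decimal avoids float rounding when converting dollars to cents.
    total_cents = int(Decimal(fields["total"].replace(",", "")) * 100)
    return Invoice(fields["vendor"], fields["invoice_number"], total_cents)


def handle_incoming(raw: str, store: list) -> Invoice:
    """Hot-path handler: structure and validate, then persist.

    `store` is a plain list standing in for a database table.
    """
    invoice = validate(structure(raw))
    store.append(asdict(invoice))
    return invoice


if __name__ == "__main__":
    records = []
    email = "From: Acme Corp\nInvoice # INV-1042\nTotal: $1,234.50\n"
    print(handle_incoming(email, records))
```

Rejecting an incomplete record at this step, rather than discovering it later in a batch pipeline, is the trade-off the section argues for: the error surfaces while the request that produced it is still being handled.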

A New Standard in Data Management

bem represents a shift towards smarter, more integrated data management solutions. Instead of retrofitting data processes after the fact, we focus on structuring data right from the start, embedded in the flow of your application. This approach not only streamlines your operations but also ensures that your data is always ready to drive decisions without delays or complications.

By embracing this new paradigm, you can move away from the tangled mess of traditional data tools and towards a more elegant, efficient, and user-centric approach. Data doesn’t have to be an afterthought. It can be a seamless part of your application’s core functionality, enhancing the experience and driving value from the moment it enters your system.